Project Introduction

As part of my Simulation for Human Movement (ME485) and Engineering Design Optimization (AA222) coursework at Stanford, my team explored how well various neural networks predict ground reaction forces (GRFs) from electromyography (EMG) sensor data.

Abstract

The development of musculoskeletal models allows us to diagnose and treat pathological movement

patterns as well as evaluate and increase athletic performance. High quality models are typically

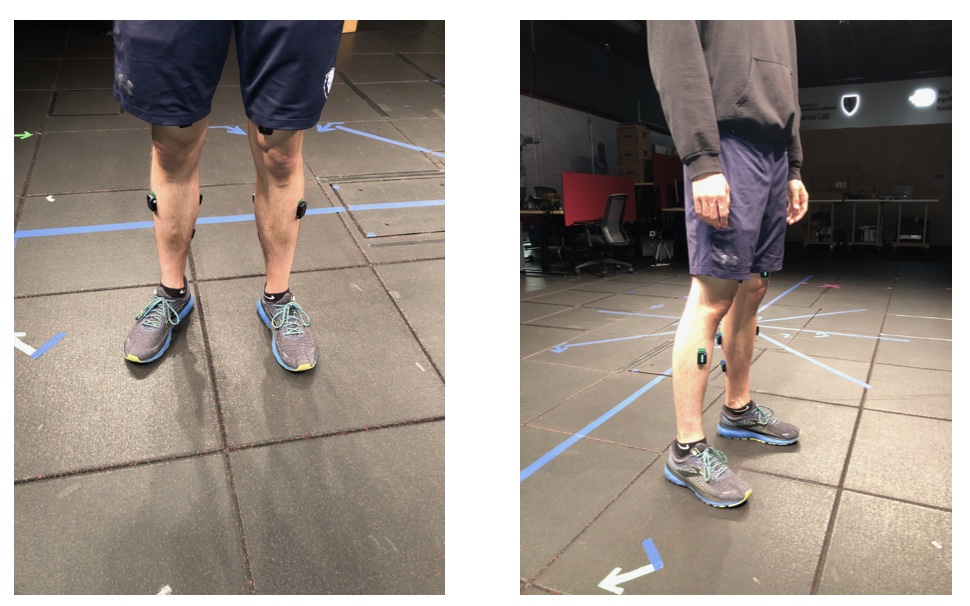

the result of over-constrained optimization problems informed by many measurements. Electromyography

(EMG) measures muscle activation, internal measurement units (IMUs) measure movement, force plates measure

ground reaction forces (GRFs), and motion-capture systems measure kinematics. GRFs are foundational in many

biomechanical analyses but their direct measurement typically limits the scope of a study because force plates

restrict the field through which a subject can move. In contrast, EMG measurements can be gathered remotely with

untethered sensors attached directly to the subject. Consequently, indirect measurement of GRFs, predicted via a

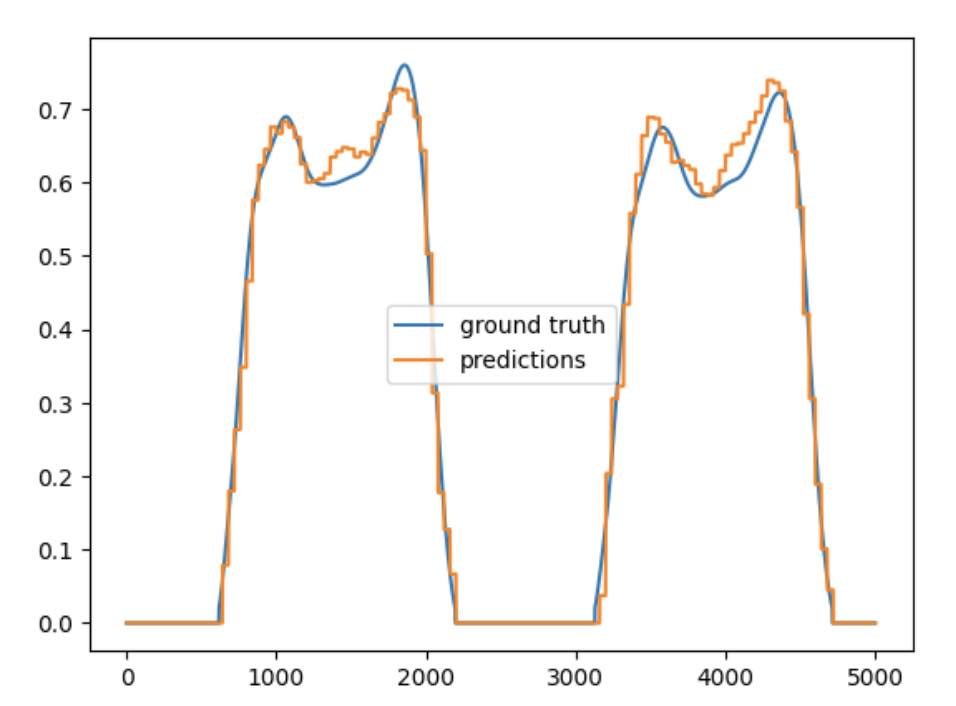

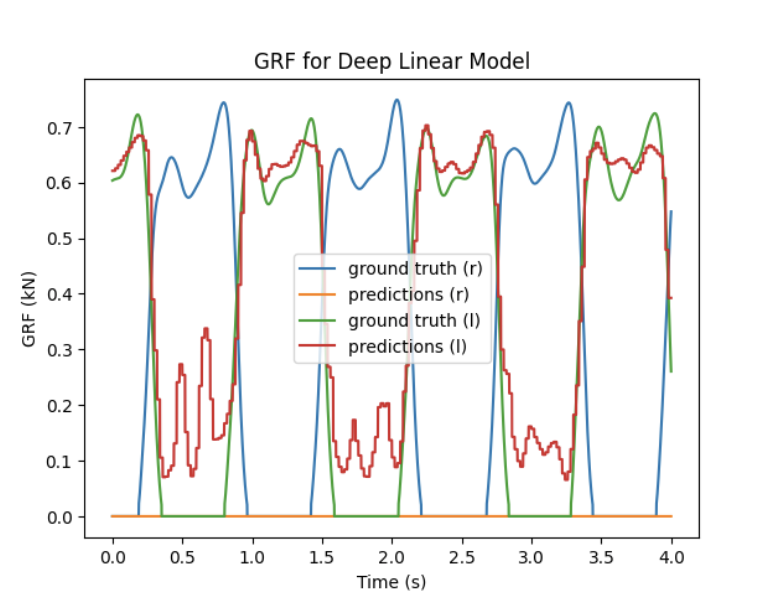

model, would allow for a wider variety of experiments. Here we demonstrate that machine learning architectures are

capable of modeling the relationship between EMG data and GRFs and can compute GRF estimations in real time. We

evaluate the performance of four neural networks trained on paired EMG and GRF data captured as three subjects walked

at a steady pace. The models can independently predict forces for each limb and identify gait cycle features like

heel-contact and push-off. While this study only examines subjects with typical gait patterns performing a single

activity, the success of the models motivates more analysis of how we can indirectly measure GRFs. More broadly,

results like this validate tactics to design studies with a wider variety of motions, not limited to the area covered

by a force plate.
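
As a rough illustration of how gait events such as heel-contact might be read off a GRF trace for one limb, the sketch below thresholds the vertical force and reports where stance begins and ends. It is not the project's method: the function name `detect_contact_events`, the 20 N threshold, and the synthetic trace are all assumptions made for illustration, and the neural networks themselves are not reproduced here.

```python
import numpy as np


def detect_contact_events(vertical_grf, threshold=20.0):
    """Return (heel_contact, toe_off) sample indices for one limb.

    Hypothetical helper: a sample counts as stance when the vertical
    force exceeds `threshold` (newtons, an assumed value). Heel-contact
    is the first stance sample after swing; toe-off is the first swing
    sample after stance.
    """
    force = np.asarray(vertical_grf, dtype=float)
    in_stance = force > threshold
    # +1 marks a swing-to-stance transition, -1 marks stance-to-swing.
    change = np.diff(in_stance.astype(int))
    heel_contact = np.flatnonzero(change == 1) + 1
    toe_off = np.flatnonzero(change == -1) + 1
    return heel_contact, toe_off


# Synthetic trace (not measured data): 10 swing samples,
# 30 stance samples at 700 N, then 10 swing samples.
trace = np.concatenate([np.zeros(10), np.full(30, 700.0), np.zeros(10)])
heel_contact, toe_off = detect_contact_events(trace)
```

The same function could be applied to a measured trace and to a model's predicted trace, so that the event timings can be compared sample by sample.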